In this interview, we sat down with our Chief Scientific Officer Gerhard Backfried to discuss fake news. While answering our questions, Backfried focused on the following areas:

- The origin of fake news

- The relationship between fake news and social media

- How open-source intelligence (OSINT), machine learning (ML) and artificial intelligence (AI) can help combat fake news

Backfried also answered our questions about the future of the relationship between AI and the news industry and spoke about his latest book chapter on fake news, which he co-authored.

In your view, what does "fake news" actually mean?

Backfried: There are many different definitions of fake news, but they all revolve around the manipulation and distortion of information with the aim to:

- confuse people,

- create some sort of illusion of consensus,

- cast doubt or drown out other voices.

From a computational point of view, we tend to look at it as computational propaganda: a subset of fake news that can be created and distributed by algorithms.

Do you believe that fake news has always been part of disinformation campaigns, or is it a new and more powerful tool that arose with the emergence of new media?

Backfried: Fake news has always been with us, and propaganda has been around for as long as we have been communicating. As for the technical side today, what has changed is that social media have enabled fake news to be used on a much broader scale.

This makes it possible to push messages out on a massive scale and, at the same time, to a much more targeted audience. By profiling and using big-data technologies, you can build specific profiles so that different people receive very different messages. The core idea, though, is always the same:

- manipulation, and

- sending slanted, wrong or outright false messages.

What are the social and technical aspects of computational propaganda?

Backfried: Computational propaganda is the use of algorithms and automation, combined with human curation, to create, manage and distribute misleading information. This happens on all kinds of media and combines social and technical aspects. The technical aspects are algorithms, agents, platforms, big-data methods, statistics and ML. The social aspects relate to the human actors: their motivations, their agendas and their social interactions. Both aspects need to be addressed to handle and counter fake news!

Figure: Computational propaganda, made up of technical aspects and social aspects

We have also witnessed a shift from an information economy to an attention economy. People want to be recognized and receive feedback, especially on social media. Therefore, they are willing to share all sorts of information, sometimes without even having read it. On the other hand, what they read and what they want to read often just reinforces what they believed in the first place: a typical filter bubble. These bubbles can also exist without social media, but again, I believe that social media provide a good basis for making them stronger.

In your experience, can the social and technical aspects be addressed together?

Backfried: It is not only that they can be addressed together, but that they have to be addressed together. Fake news is a very interdisciplinary phenomenon, and it is best tackled by an interdisciplinary approach.


If you view it only from a computer scientist's perspective, for example, you can calculate bot factors, which are useful and important, but this alone is not going to make fake news go away. Political scientists, on the other hand, can investigate the possible strategies behind such fake news, but that alone will not be sufficient either. The topic is so broad and interdisciplinary that it requires a correspondingly broad approach to counter it. I am deeply convinced of that.

Since AI can itself be used to create fake news, how can it be used to detect it?

Backfried: Like many technologies, it has two sides.

Take, for example, feedback and comments on posts. If I want to appear as thirty different people commenting on a post about a new policy, I can use technology to create thirty comments that look different on the surface. Meanwhile, the same technology can be used to find out that these comments do not come from thirty different authors and that there is really just one author behind them. So very often, the same technology can be used for both purposes.
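To make the detection side a little more concrete, here is a minimal sketch that groups comments whose wording is suspiciously similar, using character-trigram Jaccard similarity and a union-find grouping. This is a deliberately simple stand-in for real authorship analysis (stylometry), not the method Backfried refers to; the function names, the trigram size and the 0.5 threshold are hypothetical choices for illustration.

```python
from itertools import combinations


def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Lowercase the text, collapse whitespace and return its character n-grams."""
    normalized = " ".join(text.lower().split())
    return {normalized[i:i + n] for i in range(len(normalized) - n + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 for two empty sets."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def group_similar_comments(comments: list[str], threshold: float = 0.5) -> list[list[int]]:
    """Group comment indices whose pairwise trigram similarity reaches `threshold`.

    Union-find merges chains (A~B and B~C put A, B and C in one group).
    Hypothetical helper for illustration; the 0.5 threshold is an assumption.
    """
    parent = list(range(len(comments)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    grams = [char_ngrams(c) for c in comments]
    for i, j in combinations(range(len(comments)), 2):
        if jaccard(grams[i], grams[j]) >= threshold:
            parent[find(i)] = find(j)

    groups: dict[int, list[int]] = {}
    for i in range(len(comments)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


if __name__ == "__main__":
    comments = [
        "This new policy is a disaster for our town and must be stopped!",
        "This new policy is a total disaster for our town and it must be stopped.",
        "I think the policy has some good points, but the funding is unclear.",
        "This new policy is a disaster for the town and has to be stopped!!",
    ]
    print(group_similar_comments(comments))  # [[0, 1, 3], [2]]
```

The three lightly reworded comments end up in one group, while the genuinely different one stays alone. A production system would combine many more signals (posting times, account metadata, writing-style features), since surface similarity alone is easy to evade.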

Which OSINT tools can be applied to fight fake news in real time?

Backfried: Real-time behaviour is very important for early detection, because the spread is immediate. Once something goes viral, it is too late!

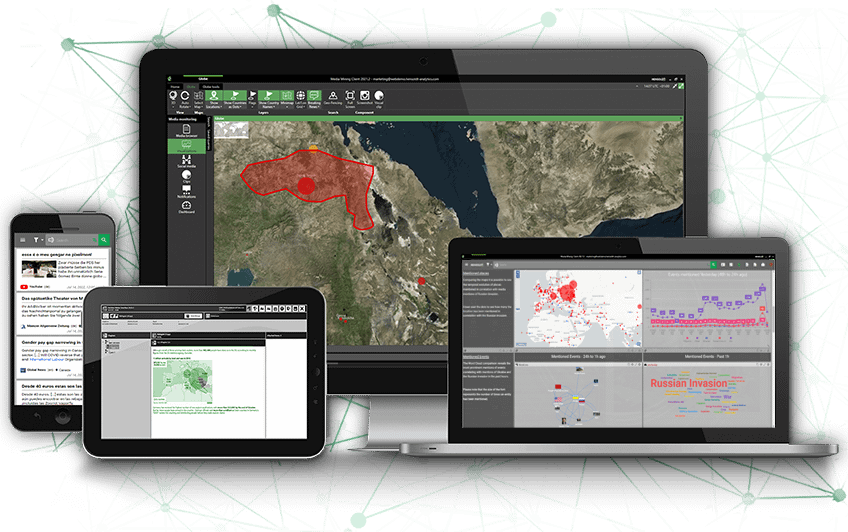

Through OSINT, you can benefit from a cross-media, cross-platform and multilingual approach: finding out how news spreads and how information circulates across different accounts and platforms. Finding patterns is also tremendously important, and this is where we can help with the HENSOLDT Analytics multi-source intelligence platform.

It is not that humans could not do this, but they could not do it in such a short time and with that amount of data. So this is a case of using computers for the tasks they are very good at. Humans then come into the loop for the tasks they are good at, such as putting matters into a larger cultural or social context.
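As an illustration of the kind of cross-platform pattern described above, here is a minimal sketch of one common check: flagging a link that several distinct accounts post within a short time window, possibly on different platforms. This is an assumed example of the general idea, not how the HENSOLDT Analytics platform works; the `(account, platform, url, timestamp)` input format, the function name, the five-minute window and the three-account threshold are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_coordinated_shares(posts, window=timedelta(minutes=5), min_accounts=3):
    """Flag URLs shared by at least `min_accounts` distinct accounts within `window`.

    `posts` is an iterable of (account, platform, url, timestamp) tuples
    (hypothetical input format). Returns {url: (sorted accounts, sorted platforms)},
    covering every window that met the threshold.
    """
    shares_by_url = defaultdict(list)
    for account, platform, url, ts in posts:
        shares_by_url[url].append((ts, account, platform))

    flagged = {}
    for url, shares in shares_by_url.items():
        shares.sort()  # chronological order per URL
        accounts, platforms = set(), set()
        start = 0
        for end in range(len(shares)):
            # Slide the left edge until the window spans at most `window`.
            while shares[end][0] - shares[start][0] > window:
                start += 1
            in_window = shares[start:end + 1]
            window_accounts = {acc for _, acc, _ in in_window}
            if len(window_accounts) >= min_accounts:
                accounts |= window_accounts
                platforms |= {plat for _, _, plat in in_window}
        if accounts:
            flagged[url] = (sorted(accounts), sorted(platforms))
    return flagged


if __name__ == "__main__":
    t0 = datetime(2020, 1, 1, 12, 0)
    posts = [
        ("acct_a", "platform_x", "https://example.com/story", t0),
        ("acct_b", "platform_y", "https://example.com/story", t0 + timedelta(minutes=1)),
        ("acct_c", "platform_z", "https://example.com/story", t0 + timedelta(minutes=2)),
        ("acct_d", "platform_x", "https://example.com/other", t0),
        ("acct_e", "platform_x", "https://example.com/other", t0 + timedelta(hours=2)),
    ]
    print(find_coordinated_shares(posts))
```

Only the story pushed by three accounts on three platforms within two minutes is flagged. A sliding window like this can run incrementally on a stream, which fits the need for early detection, but deciding whether a flagged burst is genuinely coordinated still needs the human context described above.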

Do you think multilingualism is important?

Backfried: Absolutely. In Austria and Germany, for example, we have a large number of second- and third-generation migrants with roots in countries like Turkey. They live here, but they also watch Turkish TV, listen to Turkish radio, and so on.

Some of them are still allowed to vote in Turkey. The government there will use that fact and try to influence them. Without multilingual technologies, we cannot know what is being communicated to them and how things are presented.

The same is true for Germans who emigrated from Russia to Germany: they consume Russian propaganda that is tailored specifically to them.

Of course, this is not limited to migration. It is equally important to be able to monitor what your EU neighbours are saying about certain topics, which may influence your own population.

What are the biggest technical challenges regarding the detection of fake news?

Backfried: I believe it is not black and white. Some superficial fake news can perhaps be detected quickly, but very often, even after investigating, you end up with cases where it is impossible to decide whether something is fake or true.

So it is not only about classifying items as "fake" or "not fake". It is also about supporting people and pointing out things that do not add up. Algorithms can help with that by looking at the content, the sources and the patterns of how news spreads.

We can rank accounts and documents, create indicators and help visualize things. But the people producing fake news, and the algorithms they use, are getting better too. So it is a kind of arms race between those producing fake news and those trying to combat it.

How Technology Helps Detect Fake News:

- Determining how content spreads and the patterns of that spread

- Tracking sources of fake news

- Ranking accounts and documents and visualizing the data

- Identifying indicators that something is fake news

Do you believe, then, that we will always have to rely on human judgement alongside AI, and that there will be no fully automatic solution?

Backfried: I do not foresee a fully automatic solution in the near future. Fakes are getting better as well, and we are already talking about deepfakes. Especially when it comes to visual content, we humans are quite bad at detecting fakes.

Technologies will certainly get better and help us, but AI is no substitute for critical thinking. It will support us, but it will not spare us from thinking about what we read or pass on.

Technology worldwide is advancing quickly. Do you think ethical discussions and legal frameworks are advancing at the same speed?

Backfried: Definitely not. Ethical discussions are catching up, but again, it depends on where you are to begin with. I believe that interdisciplinarity helps, because politicians or social scientists can bring in a different mindset that complements the views of others, such as computer scientists. This is a great combination.

Technology moves very fast. Ethics lags behind, and the law lags even further behind. And it is probably going to stay like that.

Do you see this as a threat to democracy or the rule of law?

Backfried: Yes. If you take away the basis for open and free discussion, certain voices will fall silent. I do not believe that Europe is very polarized, because we are also very diverse.

The US, however, is a pretty bad example of where this might lead. People just want their own opinions heard and reinforced. For some, freedom of expression translates not only into the right to be heard but almost into the right to silence others.

Do you think that people should be more educated about artificial intelligence?

Backfried: AI is not going to relieve us of the need for critical thinking. So critical thinking is still something that needs to be developed and extended.

Media literacy is also mentioned very often in this context. Learning to reflect a bit more on what we are reading and, most importantly, on what we are passing on and under which circumstances could start at school.

In the computer science classes our kids take at school, where they learn about Word, Excel or programming, they should also be encouraged to think critically about their use of social media:

- How are we responsible for its use?

- What are the dangers out there?

- What problems could be created?

This would certainly be a good strategy.

Could you tell us a bit about your book chapter regarding fake news?

Backfried: It is a chapter in a book on information quality, released in March 2019. We dedicated it specifically to the fake news phenomenon.

My HENSOLDT Analytics colleague Dorothea Thomas-Aniola, a friend from Romania who runs his own company dedicated to fake news detection, and I put our heads together. My friend has some good ideas about automatic measures for detecting fake news, so together we wrote a short section on the history of fake news and on automatic ways to detect it.

The book’s title is “Information Quality in Information Fusion and Decision Making”, published by Springer.

In your opinion, what could the future bring for the interaction between AI and news?

Backfried: A few things are already in progress, such as articles written by AI, AI to counter fake news, and AI to make certain distribution mechanisms more transparent.

If we could have more transparency about where things come from and how they are communicated, that would be great. This could be supported by better visualizations that help find hidden connections in the media. I also believe that natural language processing (NLP) will be employed a lot more in a variety of fields:

- the processing of multimedia news, as well as

- chatbots or personal assistants for improved interaction.

The processing of natural language, after all the most natural way for us humans to interact, will impact many industries, especially the news and media domains.

Gerhard Backfried

Chief Scientific Officer

Gerhard Backfried is one of the founders of HENSOLDT Analytics, where he currently holds the position of Chief Scientific Officer. Gerhard has worked in the fields of expert systems for the finance sector and personal dictation systems (IBM's ViaVoice). His technical expertise includes acoustic and language modelling as well as speech recognition algorithms. More recently, he has been focusing on the combination of traditional and social media, particularly in the context of multilingual and multimedia disaster communication. He holds a master's degree in computer science (M.Sc.) from the Technical University of Vienna, with a focus on Artificial Intelligence and Linguistics, and a Ph.D. in Computer Science from the University of Vienna. He holds a number of patents, has authored several papers and book chapters, regularly participates in conference program committees and has been contributing to national and international research projects such as KIRAS/QuOIMA, FP7/M-ECO, FP7/SIIP, H2020/ELG and H2020/MIRROR.
