In this interview, Mark Pfeiffer, Chief Visionary Officer at HENSOLDT Analytics, answered questions about the origin of Fake News, how open source intelligence (OSINT) and artificial intelligence (AI) can help, and how Fake News affects his personal life.

Do you believe that Fake News is a concept that has always been part of disinformation communication, or is it a new and more powerful tool that arose with the emergence of new media?

MP: Even though the term Fake News is quite new, the phenomenon has been around for a long time. The phrase itself only started appearing in the news a few years ago, but the issue has always existed. Its name has changed, but its format has not. It is pretty much the same as what we have seen over the centuries. Fake News has always been there, and it is something our clients have to deal with. So I would say it is simply new terminology.

Nowadays, however, the democratization of media makes it much cheaper for actors to create such information and spread it quickly, and this can have an immediate impact.

We have always been aware of media influence and of various forms of propaganda. In my opinion, however, any information or data is valuable. Even when something is not true, it reveals an actor's intention to push certain elements into public communication in order to shape opinion.

Does Fake News also affect your personal life?

MP: In my opinion, people overshare and have become much more gullible, which I find quite distressing. For me personally, it leads to many, many conversations and discussions about what is true and what is not. Regrettably, people do not question the news as much as they used to.

The fact that a piece of information confirms what people already think does not automatically make it true. I believe that questioning the media and the validity of what they report is something that needs to be done.

If there were perfect OSINT and AI solutions for detecting Fake News, do you think they should be accessible to anyone free of charge?

MP: From a humanist point of view, I would say yes, it should be free for all. From a business point of view, however, the people working on countering Fake News through AI would need to be paid. So "freely available" is probably the better term, meaning that it should be available to everyone interested in using this kind of solution. I think we as a company are very reasonable when it comes to price.

Do you think that social media providers should be more active in promoting truthful news by developing algorithms that examine the content of the news?

MP: This is a difficult proposition. Social media providers operate in a much less regulated space and act according to their profitability. After all, they are commercial enterprises, and that is how they are set up. Traditional media are different: their broadcasting spectrum is closely tied to regulation and, in most cases, limited by technically reachable geography, and neither constraint applies to social media. I know that these providers are able to weed out a large share of fake or misleading news. We saw this as soon as the cost of not doing so began to reflect poorly on them with their investors. In my personal opinion, investor pressure should not be the regulating force for such a powerful information distribution platform.

In your opinion, what could the future bring for the interaction of AI and news?

MP: This is a very broad question, and I'll only touch on some aspects. On the creation side, we will see more and more AI-generated content. Maybe not to the degree Max Tegmark describes in Life 3.0: Being Human in the Age of Artificial Intelligence, but we will see more of it as AI moves further into this space.

Regrettably, much of this will move, or rather continue to move, towards polarization and radicalization, because both of these drive interaction as well as spread. So the sober middle ground will continue to shrink, especially if we let algorithms geared toward spread and interaction continue their work unchecked.

Most people saw the results of this in 2020 at the latest, when just about everything came to be attributed to a movement or a political view. I mean this strictly as an observation. This environment lets actors exploit the situation even further by introducing still more content and using this ecosystem to meet their goals. Hybrid actors have been dreaming of this for decades, and for some years now even their wildest dreams have been coming true, at the cost of the societal fabric.

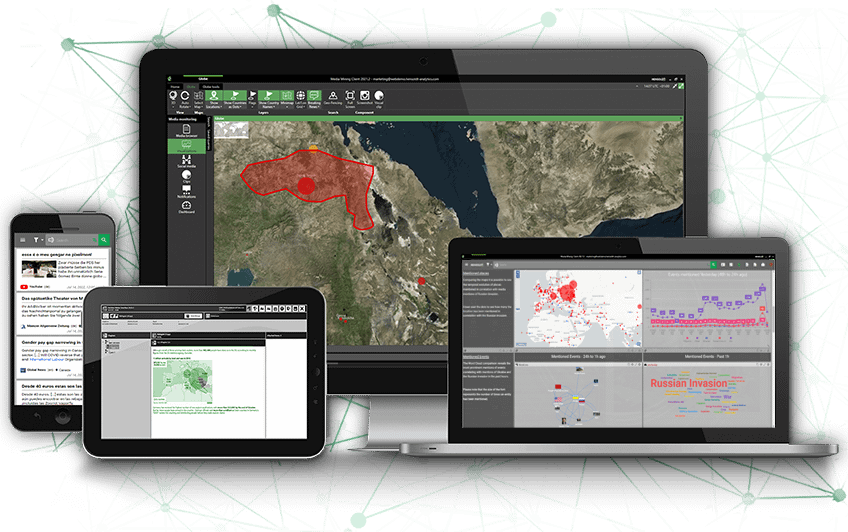

This led us to take action in this area. After all, the letters "AI" in our original name (SAIL LABS*) already stood for artificial intelligence back in 1999. So our team, and especially our research team, had these issues on their radar and had been looking for solutions.

In 2018 we hosted a conference on Fake News to bring great minds together. Among them were Peter Cochrane, who has done extensive work and thinking on a Truth Engine, and others who were grappling with the same issues and had input to offer. We looked at ways in which we could contribute to protecting society, or at least make it easier to raise awareness.

On the one hand, this is a technical challenge, where we can contribute a great deal. On the other hand, it is a societal challenge, where media literacy, and the paths to achieving it, are a governing responsibility that should be deeply ingrained. On this topic, I would highly recommend Mary Aiken's book The Cyber Effect. The author is also a clever mind whom we had the pleasure of interacting with at Interpol 2019.

Putting all these experiences and ideas together and thinking creatively led us to create the project i-unHYDE (intelligent unmasking of Hybrid Deception). We submitted it to the Code2020 innovation prize and are proud to have taken second place with this idea. We were even prouder that, although we had not known in advance what the opening words of Annegret Kramp-Karrenbauer, the German defense minister, would be, they turned out to describe exactly the challenge for which we had envisioned our solution.

To sum up, AI is neither good nor bad. It depends heavily on what we allow it to be used for. At HENSOLDT Analytics, our overarching claim is to detect and protect, and this fits perfectly with how we believe AI should be used.

*In January 2021, SAIL LABS Technology GmbH was acquired by the sensor specialist HENSOLDT and became HENSOLDT Analytics.


Mark Pfeiffer

Educated in Germany and the United States in economics and aeronautics, Mark is HENSOLDT Analytics' Chief Visionary Officer. He has spent more than two decades consulting in those fields and works in many countries across the globe, primarily in Europe, Africa, Asia and the Middle East. His clients include various international government bodies as well as governments and participants in the air transport system. He joined SAIL LABS (now HENSOLDT Analytics) in 2003, also as a shareholder, and has built up the governmental partner network and business, which now span most of the globe. In 2006 he co-founded the EUROSINT forum as a venue for exchanging ideas and concepts. His OSINT training courses are highly customized to clients' needs, and he can draw on many years of customer interaction.